The parsnip package allows the user to be indifferent to the interface of the underlying model: you can always use a formula, even if the modeling package's function only has the x/y interface. The `translate()` function can provide details on how parsnip converts the user's code to the package's syntax.

To use the code in this article, you will need to install the following packages: keras and tidymodels. You will also need the Python keras library installed (see `?keras::install_keras()`). We can create classification models with the tidymodels package parsnip to predict categorical quantities or class labels.
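As a minimal sketch of both points, the code below specifies a logistic regression with the `glm` engine and inspects it with `translate()`. It then fits the same specification once through the formula interface (`fit()`) and once through the x/y interface (`fit_xy()`). The model choice and the simulated data frame `toy` are assumptions for illustration. They are not part of the original example, which uses keras.

```r
library(tidymodels)

# Model specification only; nothing is fitted yet
log_spec <- logistic_reg() %>%
  set_engine("glm") %>%
  set_mode("classification")

# Show how parsnip maps the specification onto the engine's own call
translate(log_spec)

# Hypothetical toy data: two numeric predictors and a binary outcome
set.seed(1)
toy <- tibble(
  x1 = rnorm(100),
  x2 = rnorm(100)
)
toy$class <- factor(ifelse(toy$x1 + rnorm(100) > 0, "above", "below"))

# Same model, two interfaces: formula and x/y
fit_formula <- fit(log_spec, class ~ x1 + x2, data = toy)
fit_matrix  <- fit_xy(log_spec, x = toy[, c("x1", "x2")], y = toy$class)

# Both routes estimate the same coefficients
coef(fit_formula$fit)
coef(fit_matrix$fit)
```

The engine (`glm`) only has a formula interface, but parsnip accepts either form and converts between them.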

Classification Example • parsnip

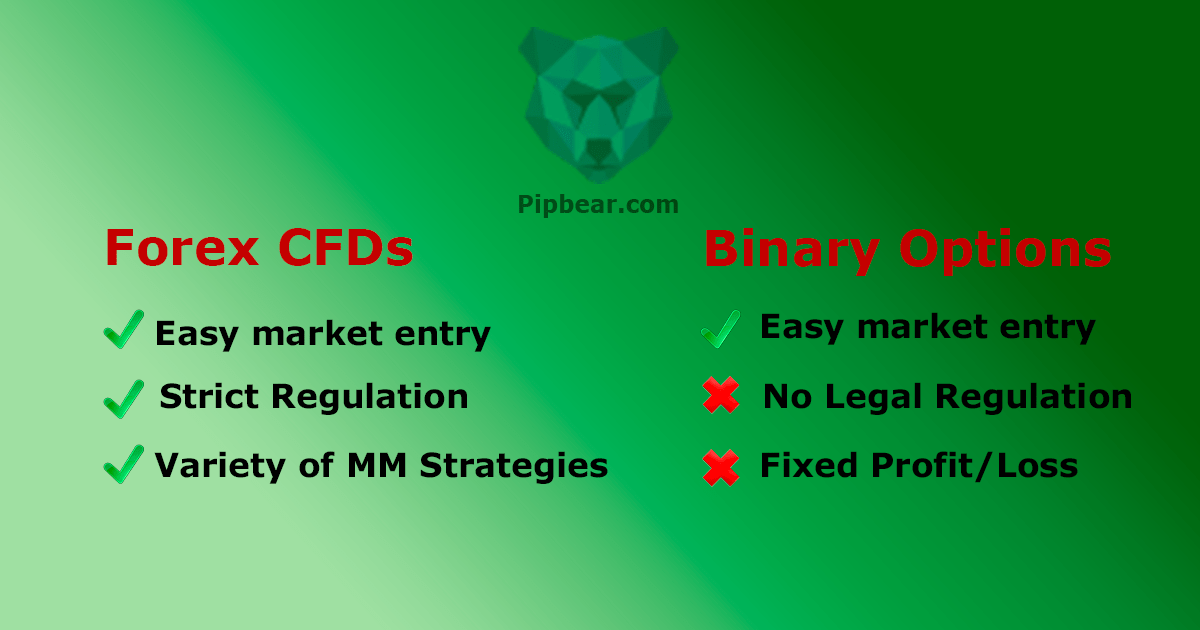

Figure 0.

First of all, we take a look at the big picture and define the objective of our data science project in business terms. In our example, the goal is to build a classification model that predicts the category of the median house price in districts in California. In particular, the model should learn from California census data and be able to predict whether the median house price in a district is below or above a certain threshold, given some predictor variables.

Hence, we face a supervised learning situation and should use a classification model to predict the categorical outcomes (below or above the price threshold). Furthermore, we use the F1-score as the performance measure for our classification problem.
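The F1-score is the harmonic mean of precision and recall: F1 = 2 · precision · recall / (precision + recall). The following sketch uses yardstick's `f_meas()` on ten made-up predictions (hypothetical data). It treats "above" as the event of interest, which is yardstick's default because "above" is the first factor level.

```r
library(yardstick)
library(tibble)

lvls <- c("above", "below")  # "above" is the first level, i.e. the event of interest

# Ten hypothetical districts: true category vs. predicted category
results <- tibble(
  truth    = factor(c(rep("above", 4), rep("below", 6)), levels = lvls),
  estimate = factor(c("above", "above", "above", "below",   # 3 TP, 1 FN
                      "above", "above",                     # 2 FP
                      "below", "below", "below", "below"),  # 4 TN
                    levels = lvls)
)

prec <- precision(results, truth = truth, estimate = estimate)$.estimate  # 3 / 5
rec  <- recall(results, truth = truth, estimate = estimate)$.estimate     # 3 / 4
f1   <- f_meas(results, truth = truth, estimate = estimate)$.estimate

# f_meas() agrees with the harmonic mean of precision and recall (2/3 here)
c(precision = prec, recall = rec, f1 = f1, manual = 2 * prec * rec / (prec + rec))
```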

Note that in our classification example we again use the dataset from the previous regression tutorial. Therefore, we first need to create our categorical dependent variable from the numeric variable median house value.

We will do this in the data understanding phase, when we create new variables. Afterwards, we will remove the numeric variable median house value from our data.

The model's predictions will be fed into a downstream system that determines whether it is worth investing in a given area. Since there could be multiple wrong entries of the same type, we apply our corrections to all rows of the corresponding variable. However, in a real data science project, data cleaning is usually a very time-consuming process.

Numeric variables should be formatted as integers (`int`) or double-precision floating-point numbers (`dbl`). Categorical (nominal and ordinal) variables should usually be formatted as factors (`fct`) and not as characters (`chr`). We choose to format the numeric variables as `dbl`, since the values could be floating-point numbers. Note that it is usually a good idea to take care of the numerical variables first.

Afterwards, we can easily convert all remaining character variables to factors using the function `across()` from the dplyr package, which is part of the tidyverse. Next, we list the columns ordered by their share of missing values, starting with the most missingness. We have a missing rate of 0.…
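The following sketch applies these cleaning steps to a tiny hypothetical data frame. The stray "years" suffix in `housing_median_age` is an invented example of a wrong entry, and it is corrected on every row of the variable. Numeric columns are converted to `dbl` first. The remaining character columns then become factors via `across()`. Finally, the missing rate per column is sorted in descending order.

```r
library(dplyr)
library(tidyr)
library(stringr)
library(tibble)

# Hypothetical raw data: numbers stored as text, one type of wrong entry, one NA
raw <- tibble(
  housing_median_age = c("41years", "21years", "52", NA),
  median_income      = c("8.3", "8.3", "7.3", "5.6"),
  ocean_proximity    = c("NEAR BAY", "NEAR BAY", "<1H OCEAN", "INLAND")
)

clean <- raw %>%
  # correction applied to all rows of the variable
  mutate(housing_median_age = str_remove(housing_median_age, "years")) %>%
  # numeric variables first: convert text to double precision (dbl)
  mutate(across(c(housing_median_age, median_income), as.numeric)) %>%
  # then every remaining character column becomes a factor (fct)
  mutate(across(where(is.character), as.factor))

# Share of missing values per column, most missingness first
missing_rate <- clean %>%
  summarise(across(everything(), ~ mean(is.na(.x)))) %>%
  pivot_longer(everything(), names_to = "variable", values_to = "missing_rate") %>%
  arrange(desc(missing_rate))

missing_rate
```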

Missing values can cause problems for some algorithms. We will take care of this issue during our data preparation phase. One very important thing you may want to do at the beginning of your data science project is to create new variable combinations. For example, the total number of rooms in a district is not very useful if you don't know how many households there are. What you really want is the number of rooms per household.

Similarly, the total number of bedrooms by itself is not very useful: you probably want to compare it to the number of rooms. And the population per household also seems like an interesting attribute combination to look at.
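The three ratio variables described above can be created with `dplyr::mutate()`. The district totals below are hypothetical round numbers, chosen so the results are easy to check by hand.

```r
library(dplyr)
library(tibble)

# Hypothetical district totals (round numbers chosen for readability)
districts <- tibble(
  total_rooms    = c(800, 6000, 1500),
  total_bedrooms = c(160, 1200, 300),
  population     = c(500, 3000, 900),
  households     = c(200, 1200, 300)
)

districts <- districts %>%
  mutate(
    rooms_per_household      = total_rooms / households,     # 4, 5, 5
    bedrooms_per_room        = total_bedrooms / total_rooms, # 0.2 each
    population_per_household = population / households       # 2.5, 2.5, 3
  )

districts
```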

Furthermore, in our example we need to create our dependent variable from median house value and then drop the original numeric variable. Afterwards, we take a look at our dependent variable and create a table with the package gt.
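The sketch below creates the dependent variable and drops the numeric original. The threshold of 150,000 and the house values are assumptions for illustration only, so substitute the threshold of your own project. Values exactly at the threshold are assigned to "above" here.

```r
library(dplyr)
library(tibble)
library(gt)

threshold <- 150000  # hypothetical cut-off

housing <- tibble(median_house_value = c(450000, 350000, 85000, 140000, 150000, 100000))

housing <- housing %>%
  mutate(price_category = factor(
    if_else(median_house_value < threshold, "below", "above"),
    levels = c("below", "above")
  )) %>%
  select(-median_house_value)  # drop the original numeric variable

# Frequency table of the new dependent variable, rendered with gt
category_table <- housing %>%
  count(price_category, name = "districts") %>%
  gt()

category_table
```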

After we took care of our data issues, we can obtain a data summary of all numerical and categorical attributes using the function `skim()` from the package skimr.
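The following sketch calls `skim()` on a simulated stand-in for the cleaned housing data. The column names mirror the article, but the values are hypothetical. Numeric columns get `mean`, `sd`, `p0` to `p100` and a small histogram, while factor columns get level counts.

```r
library(skimr)
library(tibble)

# Hypothetical stand-in for the cleaned housing data
set.seed(42)
housing <- tibble(
  median_income   = rlnorm(100, meanlog = 1.3, sdlog = 0.4),
  households      = rpois(100, lambda = 400),
  ocean_proximity = factor(sample(c("<1H OCEAN", "INLAND", "NEAR BAY"), 100, replace = TRUE))
)

# One summary covering numeric and factor columns
housing_summary <- skim(housing)
housing_summary
```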

The `sd` column shows the standard deviation, which measures how dispersed the values are. The `p0`, `p25`, `p50`, `p75` and `p100` columns show the corresponding percentiles: a percentile indicates the value below which a given percentage of observations in a group of observations fall. The 25th percentile is also called the first quartile, the 50th percentile is the median, and the 75th percentile is the third quartile. Further note that the median income attribute does not look like it is expressed in US dollars (USD).
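Base R's `quantile()` computes the same kind of percentile summary points. Here is a tiny check on the numbers 1 to 9 (a made-up vector), using R's default quantile definition.

```r
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9)

# 0th, 25th (first quartile), 50th (median), 75th (third quartile), 100th percentile
percentiles <- quantile(x, probs = c(0, 0.25, 0.5, 0.75, 1))
percentiles
# 0%: 1, 25%: 3, 50%: 5, 75%: 7, 100%: 9
```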

In fact, the data has been scaled and capped at 15, and the numbers represent roughly tens of thousands of dollars (e.g., 3 means about $30,000). Another quick way to get an overview of the type of data you are dealing with is to plot a histogram for each numerical attribute. A histogram shows the number of instances (on the vertical axis) that fall within a given value range (on the horizontal axis).

You can either plot one attribute at a time, or you can use the function `ggscatmat()` from the package GGally on the whole dataset. It plots the distribution of each numerical attribute on the diagonal, together with pairwise scatterplots and correlation coefficients (Pearson is the default).

We just select the most promising variables for our plot. Note that our attributes have very different scales. We will take care of this issue later in data preparation, when we use feature scaling (data normalization).
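The following sketch builds the scatterplot matrix with `GGally::ggscatmat()` on a few simulated numeric columns. The data are hypothetical stand-ins for the selected housing variables. Note that `ggscatmat()` only uses numeric columns, and `corMethod = "pearson"` is spelled out even though it is the default.

```r
library(GGally)

# Hypothetical numeric stand-ins for the most promising variables
set.seed(7)
housing_num <- data.frame(
  median_income       = rlnorm(200, meanlog = 1.3, sdlog = 0.4),
  housing_median_age  = runif(200, min = 1, max = 52),
  rooms_per_household = rlnorm(200, meanlog = 1.6, sdlog = 0.3)
)

# Lower triangle: scatterplots; diagonal: densities; upper triangle: correlations
scatmat <- ggscatmat(housing_num, columns = 1:3, corMethod = "pearson")
scatmat
```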

Finally, many histograms are tail-heavy: they extend much farther to the right of the median than to the left. This may make it a bit harder for some machine learning algorithms to detect patterns. We will transform these attributes later on to have more bell-shaped distributions.

For our right-skewed data, i.e. …

Next, we split our data into a training set and a test set. The training data will be used to fit models, and the test set will be used to measure model performance. We perform data exploration only on the training data. A training dataset is a dataset of examples used during the learning process to fit the models. A test dataset is a dataset that is independent of the training dataset and is used to evaluate the performance of the final model.

If a model fit to the training dataset also fits the test dataset well, minimal overfitting has taken place. A much better fit on the training dataset than on the test dataset usually points to overfitting. In our data split, we want to ensure that the training and test sets are representative of the categories of our dependent variable, so we use stratified sampling. A stratum (plural: strata) refers to a subset of the whole data from which samples are drawn.
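A stratified split can be sketched with rsample's `initial_split()` and its `strata` argument. The data below are simulated, and the 700/300 class imbalance and the 75 % training share are assumptions for illustration.

```r
library(rsample)
library(tibble)

# Hypothetical data with an imbalanced outcome: 700 "below", 300 "above"
set.seed(123)
housing <- tibble(
  median_income  = rlnorm(1000, meanlog = 1.3, sdlog = 0.4),
  price_category = factor(rep(c("below", "above"), times = c(700, 300)))
)

# 75 % training data; sampling is done within each category (stratum)
housing_split <- initial_split(housing, prop = 3/4, strata = price_category)
train_data <- training(housing_split)
test_data  <- testing(housing_split)

# The class proportions are (nearly) the same in both sets
prop.table(table(train_data$price_category))
prop.table(table(test_data$price_category))
```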

We only have two categories in our data, so each category forms one stratum.

The point of data exploration is to gain insights that will help you select important variables for your model and to get ideas for feature engineering in the data preparation phase.

Usually, data exploration is an iterative process: once you get a prototype model up and running, you can analyze its output to gain more insights and come back to this exploration step. It is important to note that we perform data exploration only with our training data. Next, we take a closer look at the relationships between our variables.

Since our data includes information about longitude and latitude, we start our data exploration with the creation of a geographical scatterplot of the data to get some first insights. Figure 2 shows that housing prices are very much related to the location (e.g., close to the ocean). We can use boxplots to check whether we actually find differences in our numeric variables for the different levels of our dependent categorical variable.
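Both plots can be sketched with ggplot2. The coordinates, incomes and outcome below are simulated (hypothetical) and only illustrate the plotting code, not the patterns in the real data.

```r
library(ggplot2)
library(tibble)

# Hypothetical training data with coordinates, one predictor and the outcome
set.seed(2)
n <- 300
train_data <- tibble(
  longitude     = runif(n, min = -124, max = -114),
  latitude      = runif(n, min = 32, max = 42),
  median_income = rlnorm(n, meanlog = 1.3, sdlog = 0.4)
)
train_data$price_category <- factor(
  ifelse(train_data$median_income + rnorm(n, sd = 0.5) > 4, "above", "below")
)

# Geographical scatterplot; a low alpha makes dense areas stand out
map_plot <- ggplot(train_data, aes(x = longitude, y = latitude, colour = price_category)) +
  geom_point(alpha = 0.4)

# Boxplot: does the numeric predictor differ between the outcome categories?
box_plot <- ggplot(train_data, aes(x = price_category, y = median_income, fill = price_category)) +
  geom_boxplot()

map_plot
box_plot
```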

Additionally, we can use the function `ggscatmat()` to create plots with our dependent variable as the color column. Again, the distributions are tail-heavy: they extend much farther to the right of the median than to the left. Next, we turn to the categorical variable ocean proximity and start with a simple count. We can observe that most districts with a median house price above the threshold have an ocean proximity of less than 1 hour. On the other hand, districts below that threshold are typically inland.
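The count can be sketched with `dplyr::count()`, adding each proximity level's share within an outcome category. The six training rows are hypothetical.

```r
library(dplyr)
library(tibble)

# Hypothetical training rows
train_data <- tibble(
  ocean_proximity = c("<1H OCEAN", "<1H OCEAN", "NEAR BAY", "INLAND", "INLAND", "INLAND"),
  price_category  = c("above", "above", "above", "below", "below", "above")
)

# Count districts per outcome category and ocean proximity,
# plus each proximity level's share within the category
ocean_counts <- train_data %>%
  count(price_category, ocean_proximity) %>%
  group_by(price_category) %>%
  mutate(share = n / sum(n)) %>%
  ungroup()

ocean_counts
```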

Hence, ocean proximity is indeed a good predictor for our two median house value categories.

For the data preparation steps, we mainly use the tidymodels packages recipes and workflows. Recipes are built as a series of optional data preparation steps, such as:

- Data cleaning: fix or remove outliers, fill in missing values (e.g., by imputing them) or drop them.
- Feature engineering: discretize continuous features, decompose features (e.g., …) or aggregate features into promising new features, like we already did.

We will want to use our recipe across several steps as we train and test our models. To simplify this process, we can use a model workflow, which pairs a model and a recipe together. Before we create our recipes, we first select the variables which we will use in the model. Note that we keep longitude and latitude to be able to map the data at a later stage, but we will not use these variables in our model.

The type of data preprocessing depends on the data and the type of model being fit. Note that the sequence of steps matters. We give longitude and latitude the role "ID" instead of "predictor", so they stay in the data but are not used by the model. This can be convenient when, after the model is fit, we want to investigate some poorly predicted values: these ID columns will be available and can be used to try to understand what went wrong.
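Here is a minimal recipe sketch under stated assumptions. The simulated `train_data`, the selected columns, and the exact choice and order of steps are illustrative and not necessarily those of the original article. The recipe assigns the "ID" role to the coordinates and drops rows with missing predictors. It log-transforms the skewed predictors before normalizing them, and it creates dummy variables after `step_novel()`, which reserves a level for factor values unseen during training. Finally, `step_zv()` removes columns with zero variance.

```r
library(recipes)
library(tibble)

# Hypothetical training data (coordinates, two skewed predictors, one factor)
set.seed(10)
n <- 200
train_data <- tibble(
  longitude           = runif(n, min = -124, max = -114),
  latitude            = runif(n, min = 32, max = 42),
  median_income       = rlnorm(n, meanlog = 1.3, sdlog = 0.4),
  rooms_per_household = rlnorm(n, meanlog = 1.6, sdlog = 0.3),
  ocean_proximity     = factor(sample(c("<1H OCEAN", "INLAND", "NEAR BAY"), n, replace = TRUE)),
  price_category      = factor(sample(c("below", "above"), n, replace = TRUE, prob = c(0.6, 0.4)))
)
train_data$median_income[c(3, 17)] <- NA  # two missing values

housing_rec <- recipe(price_category ~ ., data = train_data) %>%
  # keep the coordinates for mapping, but not as predictors
  update_role(longitude, latitude, new_role = "ID") %>%
  # drop rows with missing predictor values (imputation is an alternative)
  step_naomit(all_predictors()) %>%
  # log-transform the right-skewed predictors (requires positive values)
  step_log(median_income, rooms_per_household) %>%
  # center and scale all numeric predictors
  step_normalize(all_numeric_predictors()) %>%
  # reserve a factor level for categories not seen during training
  step_novel(all_nominal_predictors()) %>%
  # one dummy column per factor level (except the reference level)
  step_dummy(all_nominal_predictors()) %>%
  # remove predictors that have a single unique value
  step_zv(all_predictors())

housing_rec
```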

Note that instead of deleting missing values, we could also easily substitute (i.e., impute) them. Take a look at the recipes reference for an overview of all available imputation methods. Note also that the log transformation cannot be performed on negative numbers.
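The difference between deleting and imputing can be sketched on a hypothetical five-row data frame. `step_naomit()` removes the incomplete row, while `step_impute_median()` replaces the missing value with the median of the observed values (here 3.5).

```r
library(recipes)
library(tibble)

# Hypothetical toy data with one missing predictor value
toy <- tibble(
  x = c(1, NA, 3, 4, 10),
  y = factor(c("a", "b", "a", "b", "a"))
)

# Option 1: delete rows with missing values
dropped <- recipe(y ~ x, data = toy) %>%
  step_naomit(x) %>%
  prep() %>%
  bake(new_data = NULL)

# Option 2: substitute (impute) the median of the observed values
imputed <- recipe(y ~ x, data = toy) %>%
  step_impute_median(x) %>%
  prep() %>%
  bake(new_data = NULL)

nrow(dropped)  # 4 rows left
imputed$x      # values: 1, 3.5, 3, 4, 10
```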

Note that the dummy-encoding step may cause problems if your categorical variable has too many levels, especially if some of the levels are very infrequent. Note also that the package themis contains extra steps for the recipes package for dealing with imbalanced data. A classification data set with skewed class proportions is called imbalanced.

Classes that make up a large proportion of the data set are called majority classes. Those that make up a smaller proportion are minority classes (see Google Developers for more details).

Themis provides various methods for over-sampling (e.g., SMOTE) and under-sampling. If we would like to check whether all of our preprocessing steps actually worked, we can estimate the recipe on the training data with `prep()` and return the processed data with `bake()`.

Remember that we already partitioned our data set into a training set and a test set. This lets us judge whether a given model will generalize well to new data.
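The following sketch balances the classes with themis and checks the result with `prep()` and `bake(new_data = NULL)`, which returns the processed training data. The 700/300 class split and the predictors are hypothetical. The themis steps default to `skip = TRUE`, so they change the training data but not new data passed to `bake()` later.

```r
library(recipes)
library(themis)
library(tibble)

# Hypothetical imbalanced training data: 700 "below" (majority), 300 "above" (minority)
set.seed(5)
train_data <- tibble(
  median_income  = rlnorm(1000, meanlog = 1.3, sdlog = 0.4),
  housing_age    = runif(1000, min = 1, max = 52),
  price_category = factor(rep(c("below", "above"), times = c(700, 300)))
)

# Under-sampling: drop majority rows until both classes have the same size
down_rec <- recipe(price_category ~ ., data = train_data) %>%
  step_downsample(price_category)

# Over-sampling with SMOTE: create synthetic minority rows (numeric predictors only)
smote_rec <- recipe(price_category ~ ., data = train_data) %>%
  step_smote(price_category)

# Check that the steps worked: prep() estimates them, bake(new_data = NULL) returns the result
down_data  <- down_rec %>% prep() %>% bake(new_data = NULL)
smote_data <- smote_rec %>% prep() %>% bake(new_data = NULL)

table(down_data$price_category)   # 300 per class
table(smote_data$price_category)  # 700 per class
```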

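However, if we used the test set repeatedly to compare models, our performance estimates would become overly optimistic. Therefore, it is usually a good idea to create a so-called validation set from the training data (for example, with cross-validation). We also use stratified sampling here. You can choose the model type and engine from the list of models available in parsnip.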
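One way to obtain validation sets is k-fold cross-validation on the training data. The sketch below uses simulated data, and logistic regression with the `glm` engine is chosen only as an example. It creates five stratified folds with `vfold_cv()`, combines a recipe and a model in a workflow, and estimates the F1-score and accuracy with `fit_resamples()`.

```r
library(tidymodels)

# Hypothetical data: two predictors and a binary outcome
set.seed(100)
n <- 400
housing <- tibble(
  median_income      = rlnorm(n, meanlog = 1.3, sdlog = 0.4),
  housing_median_age = runif(n, min = 1, max = 52)
)
housing$price_category <- factor(
  ifelse(housing$median_income + rnorm(n, sd = 0.8) > 4, "above", "below"),
  levels = c("above", "below")
)

housing_split <- initial_split(housing, prop = 3/4, strata = price_category)
train_data <- training(housing_split)

# Five validation folds from the training data, stratified by the outcome
cv_folds <- vfold_cv(train_data, v = 5, strata = price_category)

# Model type and engine
log_spec <- logistic_reg() %>%
  set_engine("glm") %>%
  set_mode("classification")

housing_rec <- recipe(price_category ~ ., data = train_data) %>%
  step_log(median_income) %>%
  step_normalize(all_numeric_predictors())

# A workflow pairs the recipe with the model
log_wflow <- workflow() %>%
  add_recipe(housing_rec) %>%
  add_model(log_spec)

# Fit on each analysis set, evaluate on the matching assessment (validation) set
log_res <- fit_resamples(
  log_wflow,
  resamples = cv_folds,
  metrics = metric_set(f_meas, accuracy)
)

collect_metrics(log_res)
```

The test set stays untouched until the final model has been chosen.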


The models in tidymodels are stored in parsnip, the successor of caret (whence its name). Here we define a random forest model with some parameters and specify the engine we are using. The engine in the parsnip context is the source of the code to run the model. It can be a package, an R base function, Stan or Spark, among others.
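The following sketch shows such a specification. The values for `mtry`, `trees` and `min_n`, and the choice of the `ranger` and `randomForest` engines, are assumptions for illustration. Only specifications are created here; actually fitting them would require the engine package to be installed.

```r
library(parsnip)

# Random forest with some (hypothetical) parameter values
rf_spec <- rand_forest(mtry = 3, trees = 500, min_n = 5) %>%
  set_engine("ranger") %>%      # the engine: where the fitting code comes from
  set_mode("classification")

# The same model type can be switched to another engine
rf_spec_alt <- rf_spec %>% set_engine("randomForest")

# How parsnip would call the ranger package
translate(rf_spec)
```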
